An innovative way to improve search is to convert documents into a knowledge graph. In documents, related information is often scattered across different sections. A graph, by contrast, offers multiple viewpoints on the same information and lets the reader see related facts grouped together in one place. Because edges can be traversed in multiple directions, searching becomes more intuitive and more systematic, which makes large, complex data easier to navigate and manage.
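
As a rough illustration of traversal in multiple directions, the sketch below indexes a handful of triples by both their source and their target, so that a single lookup returns everything connected to a node. The triples and the names `build_index` and `neighbors` are made up for this example and are not part of the project.

```python
from collections import defaultdict

# Hypothetical toy triples (subject, relation, object); not project data.
TRIPLES = [
    ("aspirin", "inhibits", "COX-1"),
    ("aspirin", "treats", "headache"),
    ("ibuprofen", "inhibits", "COX-1"),
    ("ibuprofen", "treats", "fever"),
]


def build_index(triples):
    """Index every edge by its source node and by its target node."""
    outgoing = defaultdict(list)
    incoming = defaultdict(list)
    for subj, rel, obj in triples:
        outgoing[subj].append((rel, obj))
        incoming[obj].append((rel, subj))
    return outgoing, incoming


def neighbors(node, outgoing, incoming):
    """Collect everything linked to `node`, following edges in both directions."""
    forward = [(rel, "->", other) for rel, other in outgoing[node]]
    backward = [(rel, "<-", other) for rel, other in incoming[node]]
    return forward + backward


outgoing, incoming = build_index(TRIPLES)
cox1_view = neighbors("COX-1", outgoing, incoming)
aspirin_view = neighbors("aspirin", outgoing, incoming)
```

Here `cox1_view` gathers both drugs that inhibit COX-1, even though the two facts would typically appear in different documents or sections.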

Admittedly, converting natural language into a graph is ambitious. It requires a user-friendly graph schema capable of representing the wide variety of sentences found in the literature. Here we explore an approach that builds the schema on the same principles as natural language, aiming to address the complexity and scope of human language. Below, we provide a project overview followed by a detailed look at each development phase of the project.

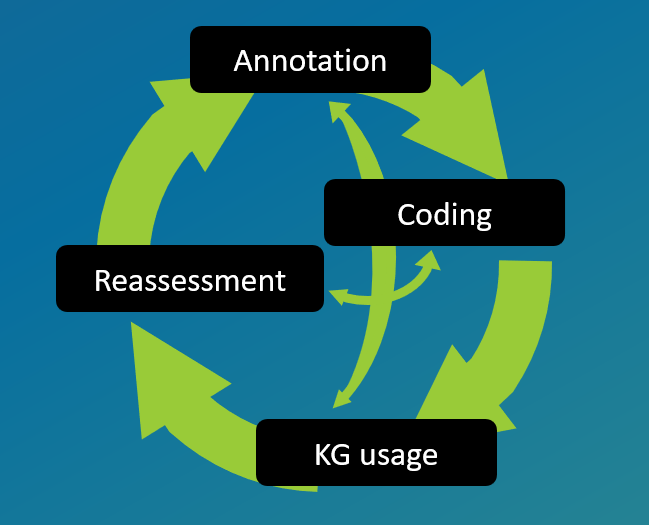

Project Overview

- Knowledge Graph Schema: Crafting a flexible schema to capture the vast array of information presented in scientific articles.

- Text-Graph-Interface Language: Creating the guidelines for the conversion of text into its graph representation.

- Fine-tuning of Language Models: Leveraging LLMs to automate the transformation of text into graph format.

- Benchmarking the System: Assessing the effectiveness of the knowledge graph in improving data retrieval and understanding.

Developing a Knowledge Graph Schema

The first step involves developing a schema that is capable of representing all the diverse information presented in various scientific studies. Information is diverse, and written language, using only a simple set of grammatical rules, has become a universal tool for representing this diversity. We believe we can endow our knowledge graph schema with similar capabilities by carefully crafting a new syntax based on entities and relations instead of punctuation and spacing.

The versatility of language comes from its vast vocabulary, and to keep the knowledge graph intuitive to use, we will likewise rely on a vast vocabulary. Essentially, the schema, like language, will be constructed using a basic syntax enhanced by an extensive vocabulary.

It’s crucial for the knowledge graph to be user-friendly, as it’s intended to function as a search engine that doesn’t require users to undergo extensive training. Additionally, our schema must remain adaptable to keep up with evolving information and practices. Our design strategy also includes efforts to reduce redundancy and enhance connectivity, ensuring efficient retrieval of information.
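
One way to picture a basic syntax enhanced by an extensive vocabulary is a schema with only two building blocks, entities and relations, whose labels come from an open vocabulary. The sketch below is an assumption for illustration only: the class names, the sample vocabulary, and the choice to let a relation point at another relation are ours and do not describe the project's actual schema.

```python
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class Entity:
    label: str  # vocabulary term naming the entity type, e.g. "Drug"
    name: str   # surface form found in the text


@dataclass(frozen=True)
class Relation:
    label: str                          # vocabulary term, e.g. "inhibits"
    source: Union[Entity, "Relation"]   # a relation may itself be an argument
    target: Union[Entity, "Relation"]


# Hypothetical vocabulary; a real one would be far larger and keep growing.
VOCABULARY = {"Drug", "Protein", "Process", "inhibits", "reduces"}


def labels_used(node):
    """Return every label that appears in an entity or a (nested) relation."""
    if isinstance(node, Entity):
        return {node.label}
    return {node.label} | labels_used(node.source) | labels_used(node.target)


def unknown_labels(node, vocabulary):
    """Labels that the vocabulary does not cover yet."""
    return labels_used(node) - vocabulary


claim = Relation("inhibits", Entity("Drug", "aspirin"), Entity("Protein", "COX-1"))
nested = Relation("reduces", claim, Entity("Process", "inflammation"))
```

In this picture, keeping up with new information means adding a term to the vocabulary, while the two-class syntax stays unchanged.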

Developing Annotation Guidelines in Tandem with the Text-Graph Notation

Graph database files are too technical to serve as a good annotation format. Since annotations are primarily done by humans, the format should be intuitive, resemble natural language, be easy to edit and clearly show the graph structure. To bridge this gap, we need an interface that mediates between the two representations. This intermediary format can then be converted into the graph database format.
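
To make the idea of an intermediary format concrete, here is a minimal sketch of a converter. The one-line notation `(name:Type) -[relation]-> (name:Type)` is invented for this example, as are `parse_line` and `to_graph`; the project's actual interface language may look quite different.

```python
import re

# Hypothetical one-line notation: (name:Type) -[relation]-> (name:Type)
PATTERN = re.compile(
    r"^\((?P<src>[^:()]+):(?P<src_type>[^:()]+)\)\s*"
    r"-\[(?P<rel>[^\[\]]+)\]->\s*"
    r"\((?P<dst>[^:()]+):(?P<dst_type>[^:()]+)\)$"
)


def parse_line(line):
    """Parse one annotation line into its named parts."""
    match = PATTERN.match(line.strip())
    if match is None:
        raise ValueError(f"not valid notation: {line!r}")
    return match.groupdict()


def to_graph(lines):
    """Turn annotation lines into a node table and an edge list."""
    nodes = {}   # node name -> node type
    edges = []   # (source name, relation label, target name)
    for line in lines:
        if not line.strip():
            continue  # blank lines separate annotations and carry no data
        parts = parse_line(line)
        nodes[parts["src"]] = parts["src_type"]
        nodes[parts["dst"]] = parts["dst_type"]
        edges.append((parts["src"], parts["rel"], parts["dst"]))
    return nodes, edges


nodes, edges = to_graph([
    "(aspirin:Drug) -[inhibits]-> (COX-1:Protein)",
    "(aspirin:Drug) -[treats]-> (headache:Symptom)",
])
```

The resulting node table and edge list could then be written out in whatever format the target graph database expects.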

The development of such interface languages has recently gained momentum, but to cover the breadth we require, we must further develop the ones proposed in the field of ‘Information Extraction’.

While the principles outlined above provide a solid foundation, imposing additional constraints can make the literature-graph translation system more efficient to build. Just as one English sentence can have several valid French translations, one sentence can have multiple correct graph representations. However, having too many options complicates the graph application. By setting clearer guidelines, we can standardize the annotations. Creating these guidelines requires careful consideration of all system constraints, making it a complex but crucial task.
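
The effect of such guidelines can be sketched in code. Assume, purely for illustration, a rule that relation labels expressing the passive direction are rewritten to their active counterpart; the `INVERSE_OF` table and both function names are hypothetical.

```python
# Hypothetical guideline: passive labels are rewritten to the active direction.
INVERSE_OF = {"inhibited_by": "inhibits", "treated_by": "treats"}


def canonicalize(triple):
    """Rewrite a (subject, relation, object) triple into its preferred form."""
    subj, rel, obj = triple
    if rel in INVERSE_OF:
        # Swap the arguments so the edge points in the preferred direction.
        return (obj, INVERSE_OF[rel], subj)
    return triple


def same_graph(annotation_a, annotation_b):
    """Two annotations agree if they are equal after canonicalization."""
    return ({canonicalize(t) for t in annotation_a}
            == {canonicalize(t) for t in annotation_b})


active = [("aspirin", "inhibits", "COX-1")]
passive = [("COX-1", "inhibited_by", "aspirin")]
```

With this rule, `same_graph(active, passive)` holds, so two annotators who chose different but equally valid forms end up with the same graph.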

Fine-tuning of a Large Language Model (LLM)

Once the annotation guidelines are consistent and the graph works properly as a search engine, the next step is to automate the text-to-graph translation. The ideal candidates for this task are Large Language Models (LLMs), as they have proven to be exceptionally good translators. The LLM’s main role is to interpret and organize vast amounts of unstructured text into a clear, graph-based format.

The LLM serves as the backbone of the automated process, enabling the system to handle the diverse and complex nature of scientific literature. To make this work, the LLM needs to be specifically trained for text-to-graph translation. Thus, we will extend the dataset created during the development of annotation guidelines by adding more sentence-graph pairs through manual effort.
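
A dataset of sentence-graph pairs could be stored as one JSON record per line, a layout commonly used for fine-tuning data. The sketch below is a minimal example under assumptions: the field names `input` and `output`, the graph notation inside the sample pairs, and the pairs themselves are illustrative, not the project's actual data format.

```python
import json

# Hypothetical sentence-graph pairs; the graph side uses an invented notation.
PAIRS = [
    ("Aspirin inhibits COX-1.", "(aspirin:Drug) -[inhibits]-> (COX-1:Protein)"),
    ("Ibuprofen treats fever.", "(ibuprofen:Drug) -[treats]-> (fever:Symptom)"),
]


def to_jsonl(pairs):
    """Serialize (sentence, graph_text) pairs, one JSON object per line."""
    lines = []
    for sentence, graph_text in pairs:
        record = {"input": sentence, "output": graph_text}
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines)


def from_jsonl(text):
    """Read the pairs back, e.g. to check the file before training."""
    records = [json.loads(line) for line in text.splitlines() if line.strip()]
    return [(record["input"], record["output"]) for record in records]


jsonl_text = to_jsonl(PAIRS)
```

Keeping the sentence as the input and the graph notation as the output mirrors the translation framing: the model learns to map one representation onto the other.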

Benchmarking the Knowledge Graph Search Function and the LLM

We will evaluate the knowledge graph (KG) on its ability to make information hidden in text more accessible. Performance will be measured by the overlap between KG search results and the results returned by other databases. This first benchmark evaluates the quality of the search results. Improving the search process itself is our primary objective, but that improvement is difficult to measure quantitatively.
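
The text does not fix a particular overlap measure, so the sketch below simply assumes that both systems return sets of result identifiers and reports the shared and unshared counts together with the Jaccard index. The function name and the sample identifiers are hypothetical.

```python
def overlap_report(kg_results, reference_results):
    """Compare two result lists as sets of identifiers."""
    kg = set(kg_results)
    reference = set(reference_results)
    shared = kg & reference
    union = kg | reference
    return {
        "shared": len(shared),
        "only_kg": len(kg - reference),
        "only_reference": len(reference - kg),
        # Jaccard index: size of the intersection over size of the union.
        "jaccard": len(shared) / len(union) if union else 1.0,
    }


report = overlap_report(
    ["doc1", "doc2", "doc3"],
    ["doc2", "doc3", "doc4", "doc5"],
)
```

Results found only by the KG are worth inspecting by hand: they may be errors, or exactly the hidden information the graph is meant to surface.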

Next, we will use metrics such as precision, recall, and F1-score to evaluate different tasks, including nested-relation extraction, relation classification, coreference resolution, entity linking, and inter-sentence relations. Note that our information-extraction task differs from previous event-extraction tasks, which makes it difficult to adopt existing benchmarks directly. We will also forgo the inter-annotator agreement metric, because it has limited relevance to our goals.
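
For the extraction tasks, these metrics can be computed by comparing predicted triples with gold-standard ones. The sketch below assumes exact matching of whole triples, which is only one possible matching criterion; the sample triples are invented.

```python
def precision_recall_f1(predicted, gold):
    """Score predicted triples against gold triples using exact matches."""
    predicted, gold = set(predicted), set(gold)
    true_positives = len(predicted & gold)
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(gold) if gold else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


GOLD = [
    ("aspirin", "inhibits", "COX-1"),
    ("aspirin", "treats", "headache"),
    ("ibuprofen", "inhibits", "COX-1"),
    ("ibuprofen", "treats", "fever"),
]
PREDICTED = [
    ("aspirin", "inhibits", "COX-1"),    # correct
    ("ibuprofen", "treats", "fever"),    # correct
    ("aspirin", "treats", "fever"),      # wrong object
]

precision, recall, f1 = precision_recall_f1(PREDICTED, GOLD)
```

In this example two of three predictions are correct and two of four gold triples are recovered, giving a precision of 2/3, a recall of 1/2, and an F1-score of 4/7.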

By following these steps, the project aims to revolutionize how scientific data is stored, retrieved, and understood, turning raw text into structured, searchable, and interconnected data.